Description

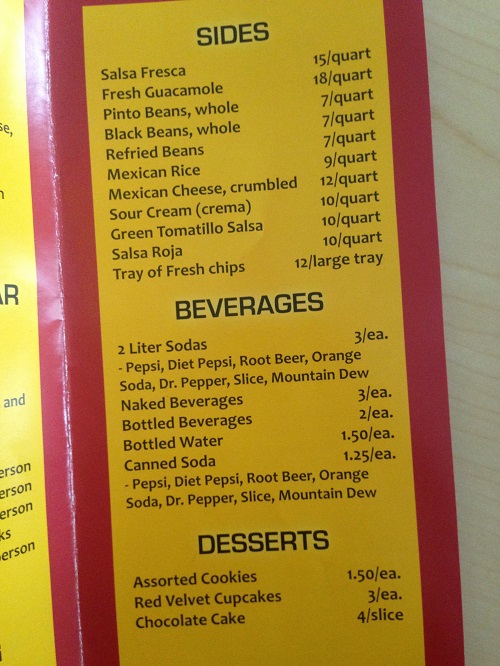

In this project, we developed an automatic English menu translation system that helps non-English speakers order meals in a foreign restaurant. The work focuses on methods for improving the accuracy of a state-of-the-art OCR algorithm and thereby raising the rate of correct recognition. The system is robust, fast, accurate, and flexible, and it provides a good user experience. Because it scales well, it can be extended to multi-language translation and larger databases, or to retrieving information about a dish through online search. A further extension would display this information on a wearable VR device.

https://web.stanford.edu/class/ee368/Project_Autumn_1516/Reports/Wang_Chen_Lang.pdf