Description

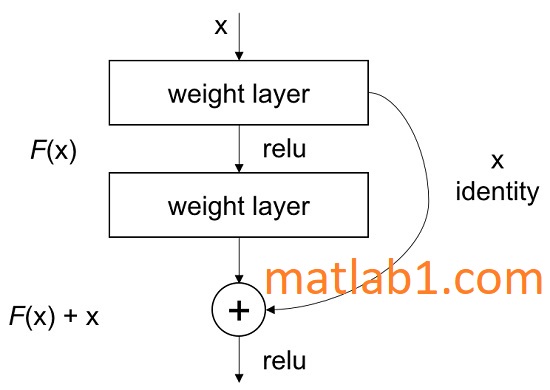

ResNet was proposed by He et al. ( https://arxiv.org/pdf/1512.03385.pdf) and won the ImageNet competition in 2015. This method showed that deeper networks can be trained. The deeper the network, the more saturated the accuracy becomes. It’s not even due to overfitting or due to the presence of a high number of parameters, but due to a reduction in the training error. This is due to the inability to backpropagate the gradients. This can be overcome by sending the gradients directly to the deeper layers with a residual block as follows:

Every two layers are connected forming a residual block. You can see that the training is passed between the layers. By this technique, the backpropagation can carry the error to earlier layers.

The model definitions can be used from

https://github.com/tensorflow/tensorflow/tree/r1.4/tensorflow/python/keras/_impl/keras/applications.

Every layer in the model is defined and pre-trained weights on the ImageNet datasezt are available.

Reviews

There are no reviews yet.