Description

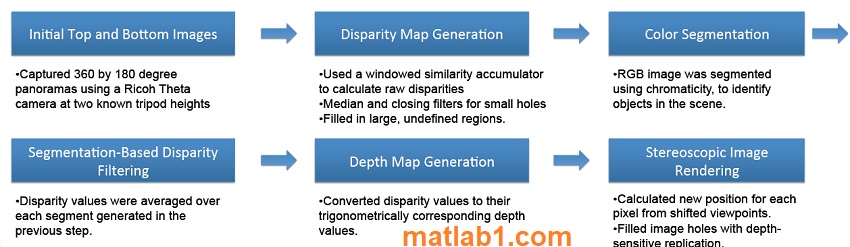

This work presents a technique for rendering stereoscopic 360° images from a pair of spherical images for virtual reality applications. The spherical images are captured with a Ricoh Theta camera from two viewpoints separated by a known vertical displacement.

Using vertical rather than horizontal camera displacement allows depth to be computed in all viewing directions except the zenith and nadir, which is crucial for rendering an omnidirectional stereo view. Stereo correspondence information from a three-dimensional similarity accumulator is combined with the RGB information in the captured images to produce dense, noise-free disparity maps that preserve edge information.

Depth is then calculated from the cleaned disparity maps, and pixels are remapped to a new viewpoint, where artifacts are filled before display on a Samsung Gear VR. With this method, high-quality stereo images can be rendered with reasonably good perceived depth.
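The disparity-to-depth step can be illustrated with a small sketch. It assumes an equirectangular image layout and polar angles measured from the upward vertical axis; the function names, the `baseline` parameter, and the choice of Python are illustrative assumptions, not the authors' implementation. For two cameras stacked vertically, the law of sines gives the range from the lower camera as `baseline * sin(theta_high) / sin(theta_high - theta_low)`. The angular disparity vanishes toward the zenith and nadir, which is why depth cannot be recovered there.

```python
import math

def row_to_polar(row, height):
    """Polar angle (radians from straight up) of a pixel row,
    assuming an equirectangular image with `height` rows."""
    return math.pi * (row + 0.5) / height

def depth_from_vertical_disparity(theta_low, theta_high, baseline):
    """Range from the lower camera to a scene point.

    theta_low / theta_high are the polar angles of the same point
    as seen from the lower and upper camera; baseline is the
    vertical distance between the two cameras.
    """
    disparity = theta_high - theta_low  # angular disparity
    if disparity <= 0.0:
        # No measurable parallax: point at infinity, or on the
        # vertical axis (zenith/nadir), where depth is undefined.
        return math.inf
    return baseline * math.sin(theta_high) / math.sin(disparity)

# Example: a point 2 m away horizontally and 1 m above the lower
# camera, with the upper camera 0.5 m higher.
theta_low = math.atan2(2.0, 1.0)
theta_high = math.atan2(2.0, 0.5)
depth = depth_from_vertical_disparity(theta_low, theta_high, 0.5)
```

In this example `depth` matches the true distance from the lower camera, `sqrt(2**2 + 1**2)`, up to floating-point error.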

MATLAB Implementation of segmentation-based disparity averaging
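As a rough illustration of what segmentation-based disparity averaging might look like, the sketch below replaces each pixel's disparity with the mean disparity of its segment. It is written in plain Python rather than MATLAB, and it assumes a segment label map is already available from some RGB segmentation step that is not specified here. The function name and the use of `None` for unmatched pixels are hypothetical.

```python
def average_disparity_by_segment(disparity, labels):
    """Replace each disparity with the mean over its segment.

    disparity: 2D list of floats, or None where no match was found.
    labels:    2D list of segment ids with the same shape.
    """
    sums, counts = {}, {}
    for d_row, l_row in zip(disparity, labels):
        for d, label in zip(d_row, l_row):
            if d is None:
                continue  # ignore pixels without a valid disparity
            sums[label] = sums.get(label, 0.0) + d
            counts[label] = counts.get(label, 0) + 1
    # Pixels in segments with no valid disparity at all stay None.
    return [
        [sums[label] / counts[label] if label in counts else None
         for label in l_row]
        for l_row in labels
    ]

noisy = [[1.0, 3.0, 10.0],
         [None, 2.0, 12.0]]
segments = [[0, 0, 1],
            [0, 0, 1]]
smoothed = average_disparity_by_segment(noisy, segments)
```

Because averaging never crosses a segment boundary, disparity edges that coincide with color edges are kept sharp, while noise and missing values inside a segment are smoothed out.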
