As part of the web form submission and in order to download users’ tweets, the users must be followed on Twitter. Following users as they participate via the web form and downloading their tweets is accomplished by using three programs developed as part of this work. All use Java and the Twitter library for interfacing with Twitter .

The first program is a library to handle authentication using the thesis Twitter account. Twitter uses an interactive OAuth authentication system which requires authenticating applications to ask the program operator to visit a web address. Upon opening the web address, the operator is shown a pin to enter in the application, allowing it to authenticate with Twitter using the operator’s Twitter account. Once the application has authenticated, it is possible to store the generated keys and avoid authenticating in the future. The library handles storage and retrieval of the keys, or generating them if they can not be found.

The second application handles following participating Twitter users and updating database fields to reflect follow status. It is run after a user submits the web form shown in Figure and described in subsection . First, after a new participant’s information is entered in the database, the application queries the database for users marked as new or pending. Next, it queries Twitter for users followed by the thesis account (this returns users as followed or pending). Using set logic, the application determines what users need to be followed on Twitter and follows them.

Feature type names

Abbreviation Features and Extraction

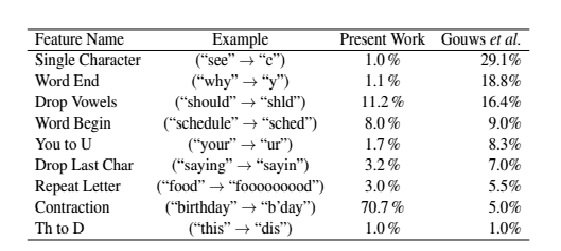

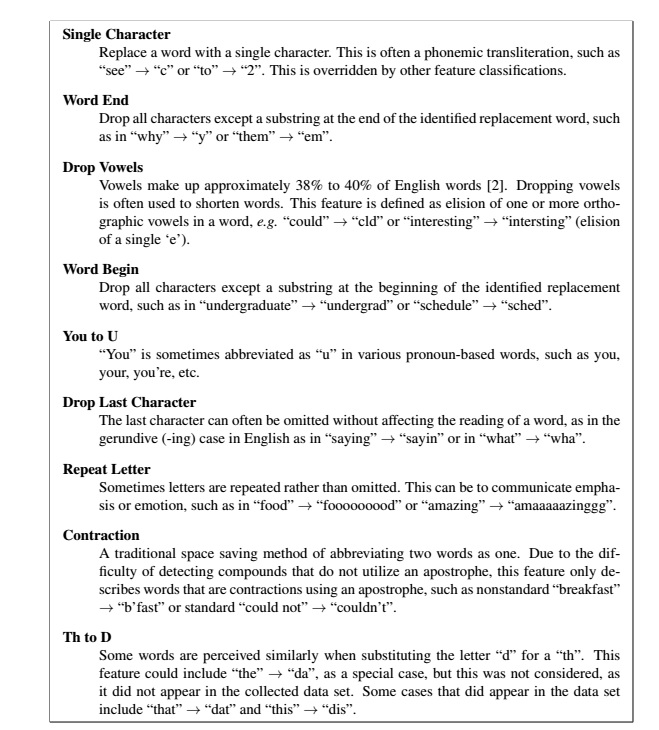

In order to develop a model for predicting Twitter user age, the collected tweets are analyzed for abbreviation features. The abbreviation features used are those found by Gouws et al. to be most frequent in an overall Twitter corpus . Those features are descriptively titled single character, word end, drop vowels, word begin, you to u, drop last character, repeat letter, contraction, and th to d. The usage frequency of abbreviation patterns found in the collected data set are compared to those found by Gouws et al. in Table Features are described with more detail in Figure and are discussed in relation to the collected data set below.

The tweets collected using the Java framework described in subsection were fed to a python framework for further analysis. The text of each tweet was given to the cleanser framework developed by Gouws et al., which attempted to text-normalize each tweet into a standard English sentence. Different stages of the text normalization utilized functions from the python Natural Language Toolkit (NLTK) framework and the SRI Language Modeling Toolkit (SRILM) . The algorithm is outlined in Figure For each tweet, remove any series of punctuation determined to be emoticons, as well as HTML bracket artifacts, should they exist. A simple regular expression approach is taken to recognizing emoticons, as the problem is in itself quite difficult,as outlined by Bedrick . Because of this difficulty, only a small subset of all emoticons could be correctly identified, so such features are not included in this work’s analysis. Tokenize each tweet into individual word tokens and punctuation. The NLTK tokenize.punkt library is used for tokenizing sentences, as it is effective at separating words and punctuation, as well as separating contraction words into multiple tokens, e.g. “shouldn’t” ! “should n’t”. Generate substitution candidates for each OOV token using a string subsequence kernel. Each candidate is paired with a probability used as an evaluation of the similarity to the original OOV token. Tokens that are not OOV are assigned a substitution candidate the same as the original token and a probability of 1. Probabilities are generated by the SRILM ngram program using n-gram language models

based on Gouws et al.’s LA Times corpus and Han and Baldwin’s Twitter corpus Aword mesh (a confusion network that can be translated into a probabilistic finite-state grammar) is generated from the list of candidates and probabilities, which is given to the lattice-tool program of SRILM to decode into a most likely cleaned sentence, consisting of the candidates with thelowest expected word error. (5) The uncleaned original and tokenized texts are recorded, along with a list of pairs consisting of an OOV token and its generated substitution. Non-OOV tokens are retained as part of the tokenized text, but since they are not abbreviated, they are not recorded in the substitution pairs, as the pairs are used for abbreviation feature generation.

The abbreviation features are determined on a per-tweet level, based on a per-token analysis using the algorithm outlined in Figure 6. The input is the list of token and substitution pairs gen erated by the algorithm above. For each tweet, each token and substitution pair are passed to an abbreviation-finding function.The function applies a series of regular expressions and

substring checks, which correspond to each of the defined abbreviation features. Each token pair is thereby assigned an abbreviation feature classification representing which abbreviation type it matches. The tweet’s set of token abbreviation feature classifications are consolidated into a single percentage feature vector for each tweet. The values in the vector reflect the percentage.

Description of the nine abbreviation features from Gouws et al.. Each word pair was

assigned one feature type classification. Some feature types overlap, such as drop last character

and word begin. In these cases, the more specific classification was assigned (drop last character).

Text cleanser algorithm provided . This work added some customization in tokenization and small fixes, but otherwise the algorithm is the same.

of tokens in the tweet which utilize each abbreviation type. The percentages are further gen eralized to a boolean vector, which describes if a given abbreviation feature type was used at all in a tweet. Equivalent experiments were run using the percentage vectors and the boolean vectors and compared. Studies have found that enough information can often be found in a single tweet to do effective binary classification, such as on gender . By comparing the results of classification using the percentage and boolean vectors, it can be determined how much abbreviation feature in formation is necessary for a good classification. Additionally, combining boolean and percentage features with word n-gram features, as well as best first feature selection or principal component analysis feature extraction, was found to further improve classification results.

Data Set Analysis

A total of  Twitter users supplied their demographic information. Of those

Twitter users supplied their demographic information. Of those  participants,

participants,  had tweets. This is in part due to several users having no tweets, and some who disappeared from Twitter after submitting the web form data. This means

had tweets. This is in part due to several users having no tweets, and some who disappeared from Twitter after submitting the web form data. This means  of participating users have no tweets. According to Beevolve Technologies, as many as 25% of users have never tweeted . In the present data set, the average number of tweets submitted by a user is 1538. Beevolve Technologies presents separate figures for tweet frequencies based on gender, which, when combined, give an.

of participating users have no tweets. According to Beevolve Technologies, as many as 25% of users have never tweeted . In the present data set, the average number of tweets submitted by a user is 1538. Beevolve Technologies presents separate figures for tweet frequencies based on gender, which, when combined, give an.

Abbreviation feature assignment algorithm. The classifications are assigned such that

features which are subsets of other features are assigned first. Some more specific features are

subsets of other features. For example, any drop last character feature is also a word begin feature.

Basic information about the present data set

average for tweets per person of 590, much lower than in the collected data set Basic descriptive data set information is shown in Table 2. From the participants, over a hundred thousand tweets were collected, comprising 1.65 million tokens. Of those tokens, about 1.4 million were words and around two hundred thousand were punctuation. More detailed information about the collected tweets from the 66 users that had tweets follows in subsection Information about the demographic data collected as part of the data set (all 72 users) is in subsection .