Multi-Agent Systems

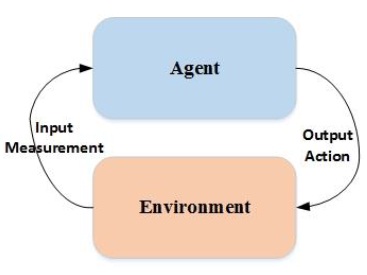

In simple terms, an agent is a computer system that can decide which actions to take in order to satisfy its design objectives. Agents that must operate robustly in rapidly changing, unpredictable, or open environments, where there is a significant possibility that actions can fail, are known as intelligent agents or, sometimes, autonomous agents. Figure 4.1 gives an abstract, top-level view of an agent. The diagram shows the agent producing output actions in order to affect its environment. In most domains of reasonable complexity, an agent will not have complete control over its environment. At best it will have partial control, in that it can influence the environment. From the point of view of the agent, this means that the same action performed twice in apparently identical circumstances might appear to have entirely different effects, and in particular, it may fail to have the desired effect.

Figure 4.1 Abstract schematic of an agent

Types of Agent Environments:

This section discusses the following types of agent environment:

Accessible vs inaccessible

An accessible environment is one in which the agent can obtain complete, accurate, up-to-date information about the environment’s state. Most moderately complex environments (including, for example, the everyday physical world and the Internet) are inaccessible. The more accessible an environment is, the simpler it is to build agents to operate in it.

Deterministic vs non-deterministic

A deterministic environment is one in which any action has a single guaranteed effect: there is no uncertainty about the state that will result from performing an action. The physical world can, to all intents and purposes, be regarded as non-deterministic. Non-deterministic environments present greater problems for the agent designer.
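The distinction can be made concrete with a minimal Python sketch. It assumes the environment is given as a hypothetical finite transition table that maps each (state, action) pair to the set of states that could result. Under that assumption, the environment is deterministic exactly when every such set has a single element. The light-switch and gripper tables are invented illustrations, not examples from the source.

```python
from typing import Dict, Hashable, Set, Tuple

State = Hashable
Action = Hashable

# Hypothetical transition table: each (state, action) pair maps to the SET of
# states that could result from performing that action in that state.
Transitions = Dict[Tuple[State, Action], Set[State]]


def is_deterministic(transitions: Transitions) -> bool:
    """True if every action has a single guaranteed effect, i.e. every
    (state, action) pair leads to exactly one successor state."""
    return all(len(successors) == 1 for successors in transitions.values())


# Toy light switch (illustrative): pressing always toggles the light.
switch_env: Transitions = {
    ("off", "press"): {"on"},
    ("on", "press"): {"off"},
}

# Toy robot gripper (illustrative): grasping may succeed or fail, so the same
# action in the same state can have different effects.
gripper_env: Transitions = {
    ("empty", "grasp"): {"holding", "empty"},
    ("holding", "release"): {"empty"},
}

print(is_deterministic(switch_env))   # True
print(is_deterministic(gripper_env))  # False
```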

Episodic vs non-episodic

In an episodic environment, the performance of an agent depends on a number of discrete episodes, with no link between the agent's performance in different episodes. An example of an episodic environment would be a mail sorting system. Episodic environments are simpler from the agent developer’s perspective because the agent can decide what action to perform based only on the current episode; it need not reason about the interactions between this and future episodes.
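The mail sorting example can be sketched as follows. This is a minimal illustration that assumes a hypothetical routing table in which the first character of a postcode selects a bin. It shows the defining property of an episodic environment: `sort_letter` sees only the current letter and keeps no memory between calls.

```python
from typing import Dict, List

# Hypothetical routing table: the first character of a postcode selects a bin.
BINS: Dict[str, str] = {"A": "bin-north", "B": "bin-south", "C": "bin-east"}


def sort_letter(postcode: str) -> str:
    """Decide where one letter goes using only the current episode (this letter).
    Unknown or empty postcodes are sent for manual sorting."""
    return BINS.get(postcode[:1].upper(), "bin-manual")


def run_episodes(postcodes: List[str]) -> List[str]:
    # Each letter is an independent episode: no state is carried between calls,
    # so earlier decisions cannot influence later ones.
    return [sort_letter(p) for p in postcodes]


letters = ["A12", "c07", "Z99", "B40"]
print(run_episodes(letters))
# ['bin-north', 'bin-east', 'bin-manual', 'bin-south']
```

Because no information flows between episodes, processing the same letters in a different order yields the same decision for each letter.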

Static vs dynamic

A static environment is one that can be assumed to remain unchanged except by the performance of actions by the agent. A dynamic environment is one that has other processes operating on it, and which hence changes in ways beyond the agent’s control. The physical world is a highly dynamic environment.

Discrete vs continuous

An environment is discrete if there is a fixed, finite number of actions and percepts in it; otherwise it is continuous. Russell and Norvig give a chess game as an example of a discrete environment, and taxi driving as an example of a continuous one.

Intelligent Agent Characteristics:

This section discusses the most important characteristics of intelligent agents:

Reactivity: intelligent agents are able to perceive their environment, and respond in a timely fashion to changes that occur in it in order to satisfy their design objectives.

Pro-activeness: intelligent agents are able to exhibit goal-directed behavior by taking the initiative in order to satisfy their design objectives.

Social ability: intelligent agents are capable of interacting with other agents (and possibly humans) in order to satisfy their design objectives.

Intelligent Agent Architectures

To formalize the abstract concept of an agent, assume that the environment can be in any of a set of states $S = \{s_1, s_2, \dots\}$. In each state, the agent can take an action from the action space $A = \{a_1, a_2, \dots\}$. An agent can then be represented as a function

$$action : S^* \to A$$

which maps sequences of environment states (where $S^*$ denotes the set of finite sequences over $S$) to actions. Furthermore, the non-deterministic behavior of the environment can be modeled by the function

$$env : S \times A \to \wp(S)$$

which takes the current state of the environment and an action (performed by the agent), and maps them to a set of environment states, any one of which may result ($\wp(S)$ is the power set of $S$). The following are different architectures for intelligent agents:
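Before looking at the individual architectures, the formal model can be made concrete with a small simulation. This is a minimal sketch under stated assumptions: the states `s1` to `s3`, the actions `a1` and `a2`, the table `ENV`, and the history-based agent are hypothetical. The model does not say which successor state the environment picks, so a seeded random choice is used here to stand in for that non-determinism.

```python
import random
from typing import Callable, Dict, FrozenSet, List, Tuple

State = str
Action = str

# env : S x A -> P(S). Each (state, action) pair maps to the set of states that
# might result; the agent cannot choose which one actually occurs.
ENV: Dict[Tuple[State, Action], FrozenSet[State]] = {
    ("s1", "a1"): frozenset({"s2"}),
    ("s1", "a2"): frozenset({"s1", "s3"}),
    ("s2", "a1"): frozenset({"s1", "s3"}),
    ("s2", "a2"): frozenset({"s2"}),
    ("s3", "a1"): frozenset({"s3"}),
    ("s3", "a2"): frozenset({"s1"}),
}


def env(state: State, action: Action) -> FrozenSet[State]:
    return ENV[(state, action)]


def agent(history: List[State]) -> Action:
    """action : S* -> A. This hypothetical agent inspects its whole history:
    it performs a1 until it has visited s2, then switches to a2."""
    return "a2" if "s2" in history else "a1"


def run(agent_fn: Callable[[List[State]], Action], s0: State, steps: int,
        rng: random.Random) -> List[State]:
    history = [s0]
    for _ in range(steps):
        a = agent_fn(history)              # the agent decides from the history
        possible = env(history[-1], a)     # the set of possible outcomes
        # The environment, not the agent, resolves the non-determinism;
        # here that is simulated by a random choice among the successors.
        history.append(rng.choice(sorted(possible)))
    return history


print(run(agent, "s1", 5, random.Random(0)))
```

Every printed run is a sequence of states in which each step is one of the outcomes that `env` allows for the action the agent chose at that point.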

Purely Reactive Agents

An agent that decides which action to take without referring to its previous experience (history) is called a purely reactive agent. Such an agent responds directly to the current state of its environment and can be represented by a function

$$action : S \to A$$
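A thermostat is a commonly used illustration of a purely reactive agent. The sketch below assumes a hypothetical set point of 20 °C and represents the environment state simply as the current temperature; the names `SET_POINT_C` and `thermostat_action` are invented for this example.

```python
# action : S -> A. A purely reactive agent looks only at the current state and
# keeps no history. Hypothetical thermostat with a set point of 20 degrees C.
SET_POINT_C = 20.0


def thermostat_action(temperature_c: float) -> str:
    """Map the current environment state (temperature) directly to an action."""
    return "heater_off" if temperature_c >= SET_POINT_C else "heater_on"


for t in (17.5, 20.0, 23.0):
    print(t, thermostat_action(t))
# 17.5 heater_on
# 20.0 heater_off
# 23.0 heater_off
```

Because the decision depends only on its argument, two identical states always produce the same action, whatever happened before.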

Perception Agents

In this architecture, the agent is divided into two sub-functions, called the observation (see) function and the action function. The observation function captures the agent's ability to observe its environment, whereas the action function represents the agent's decision-making process. This architecture is shown in Figure 4.2. The observation function can be implemented either in hardware, for hardware agents operating in the physical world, or in software, for software agents. The output of the observation function is a percept (perceptual input). Letting $P$ denote the set of percepts, the observation function can be presented as:

$$see : S \to P$$

which maps environment states to percepts.

The action function is then defined by:

$$action : P^* \to A$$

which maps sequences of percepts to actions.

Figure 4.2 Perception agent architecture
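The two sub-functions can be sketched in Python as follows. Purely for illustration, the sketch assumes that the environment state is an exact room temperature, that the sensor only reports the coarse percepts "cold" or "ok", and that the agent switches a heater on only after two consecutive "cold" percepts. That last rule depends on the percept history, which is why `action` takes the whole sequence rather than a single percept.

```python
from typing import List

State = float   # environment state: exact room temperature in degrees C (assumed)
Percept = str   # what the agent can observe: "cold" or "ok" (assumed)
Action = str


def see(state: State) -> Percept:
    """see : S -> P. The sensor reports only a coarse reading, so distinct
    environment states can produce the same percept."""
    return "cold" if state < 20.0 else "ok"


def action(percepts: List[Percept]) -> Action:
    """action : P* -> A. Decide from the whole percept sequence: switch the
    heater on only after two consecutive 'cold' readings."""
    return "heater_on" if percepts[-2:] == ["cold", "cold"] else "heater_off"


def run(states: List[State]) -> List[Action]:
    percepts: List[Percept] = []
    actions: List[Action] = []
    for s in states:
        percepts.append(see(s))           # observation sub-function
        actions.append(action(percepts))  # decision-making sub-function
    return actions


print(run([21.0, 18.5, 19.0, 22.0]))
# ['heater_off', 'heater_off', 'heater_on', 'heater_off']
```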

Agents with States

This type of agent, shown in Figure 4.3, has an internal data structure, which is typically used to record information about the environment's state and history. Let $I$ be the set of all internal states of the agent; the agent’s decision making is then based on this information. The observation function for a state-based agent is unchanged, mapping environment states to percepts as before:

$$see : S \to P$$

The action-selection function action is now defined as a mapping from internal states to actions:

$$action : I \to A$$

An additional function next is introduced, which maps an internal state and a percept to a new internal state:

$$next : I \times P \to I$$

The agent starts in an initial internal state $i_0$, observes the environment state $s$ and generates the percept $see(s)$, updates its internal state to $next(i_0, see(s))$, and then selects the action $action(next(i_0, see(s)))$.

Figure 4.3 Agents with states architecture
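A minimal sketch of the state-based agent, using a hypothetical heater scenario: the environment state is a room temperature, the percepts are "cold" or "ok", and instead of storing the full percept sequence, the internal state records only the number of consecutive "cold" percepts. The layout of `Internal` is an assumption made for illustration. The function is named `next_state` rather than `next` to avoid shadowing Python's built-in `next`.

```python
from dataclasses import dataclass
from typing import List

State = float   # environment state: room temperature in degrees C (assumed)
Percept = str


@dataclass(frozen=True)
class Internal:
    """An element of I: a compact summary of history, here the number of
    consecutive 'cold' percepts seen so far (a hypothetical choice)."""
    cold_streak: int = 0


def see(state: State) -> Percept:                      # see : S -> P
    return "cold" if state < 20.0 else "ok"


def next_state(i: Internal, p: Percept) -> Internal:   # next : I x P -> I
    return Internal(i.cold_streak + 1 if p == "cold" else 0)


def action(i: Internal) -> str:                        # action : I -> A
    return "heater_on" if i.cold_streak >= 2 else "heater_off"


def run(states: List[State], i0: Internal = Internal()) -> List[str]:
    i, actions = i0, []
    for s in states:
        i = next_state(i, see(s))   # update the internal state from the new percept
        actions.append(action(i))   # choose an action from the internal state only
    return actions


print(run([21.0, 18.5, 19.0, 22.0]))
# ['heater_off', 'heater_off', 'heater_on', 'heater_off']
```

The design choice here is that the internal state summarizes the relevant part of the history in a single counter, so the agent's memory does not grow with the length of the run.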

Additionally, the agents can be categorized into the following groups in terms of how the action function is implemented:

Logic-based agents, in which decision making is achieved through logical deduction.

Reactive agents, in which decision making is implemented as some form of direct mapping from state to action.

Belief-desire-intention agents, in which decision making depends on the manipulation of data structures representing the beliefs, desires, and intentions of the agent.

Layered architectures, in which decision making is achieved through various software layers, each of which is more or less explicitly reasoning about the environment at different levels of abstraction.