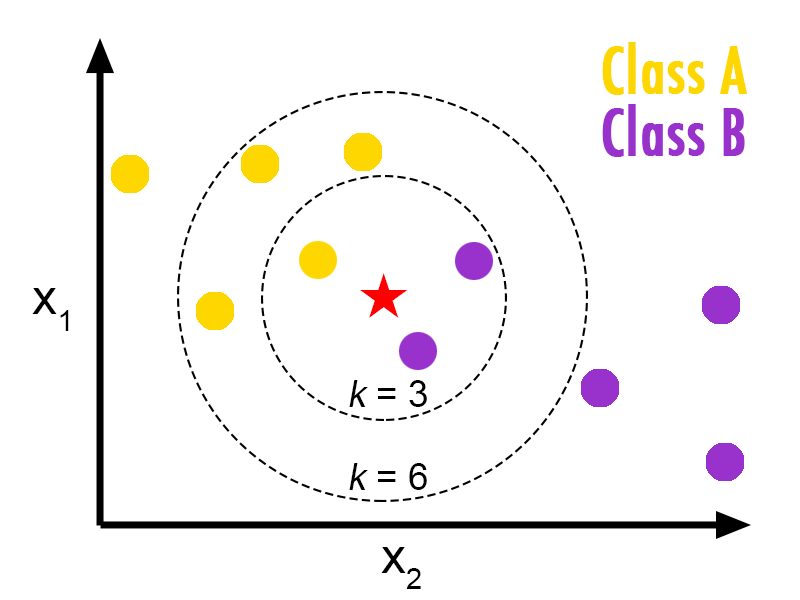

KNN is a straightforward algorithm that stores all available cases and classifies new cases based on a similarity (distance) measure [25]. KNN is a non-parametric lazy learning algorithm [26].
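As a minimal sketch of this classification rule, the example assumes Euclidean distance as the similarity measure and a simple majority vote among the k nearest stored cases. The helper names `euclidean` and `knn_classify` and the toy data are hypothetical illustrations, not the implementation from the cited works.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train_X, train_y, query, k=3):
    """Hypothetical helper: label `query` by majority vote among its k nearest stored cases."""
    # Distance from the query to every stored training case.
    distances = [(euclidean(x, query), label) for x, label in zip(train_X, train_y)]
    # Keep the k closest cases, ordered by distance only.
    nearest = sorted(distances, key=lambda d: d[0])[:k]
    # Majority vote; on a tie, Counter keeps the label encountered first (the nearer one).
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data: two well-separated clusters (illustrative only).
train_X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_classify(train_X, train_y, (1.1, 0.9), k=3))  # A
print(knn_classify(train_X, train_y, (5.1, 5.0), k=3))  # B
```

The choice of k trades off noise sensitivity (small k) against blurring class boundaries (large k); the value 3 here is arbitrary.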

Being non-parametric means that KNN makes no assumptions about the underlying data distribution. This is useful in practice, because most real-world data do not conform to the typical theoretical assumptions (for example, normally distributed features).

Non-parametric algorithms such as KNN are therefore well suited to such data. Being a lazy algorithm, KNN does not use the training data points to build a generalized model [26]. As a result, the training phase is minimal and very fast.

The absence of generalization means that KNN keeps all of the training data. More precisely, all of the training data are needed during the testing phase. We used the k-nearest neighbor method as the baseline classification method because of its simplicity and efficacy [27].
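The lazy-learning property can be illustrated with a small, hypothetical `LazyKNN` class (not the implementation used in this study): `fit` only memorizes the training data, while every prediction must scan all stored cases. Euclidean distance via `math.dist` (Python 3.8+) and majority voting are assumptions made for the sketch.

```python
import math
from collections import Counter


class LazyKNN:
    """Hypothetical minimal k-NN classifier illustrating lazy learning."""

    def __init__(self, k=3):
        self.k = k
        self.X = None
        self.y = None

    def fit(self, X, y):
        # "Training" is pure memorization: no model parameters are estimated.
        self.X = list(X)
        self.y = list(y)
        return self

    def predict_one(self, query):
        if self.X is None:
            raise RuntimeError("call fit() first: k-NN needs the stored training data")
        # Each prediction scans every stored case, so test-time cost grows with len(self.X).
        nearest = sorted(
            zip(self.X, self.y),
            key=lambda pair: math.dist(pair[0], query),
        )[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

    def predict(self, queries):
        return [self.predict_one(q) for q in queries]


model = LazyKNN(k=3).fit(
    [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)],
    ["neg", "neg", "neg", "pos", "pos", "pos"],
)
preds = model.predict([(0.5, 0.5), (10.5, 10.5)])
print(preds)  # ['neg', 'pos']
```

Because `fit` does no work, the cost is shifted to prediction time: each query costs one distance computation per stored training case, which is why the full training set must be kept during testing.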