Cooperation

Autonomous driving is an extremely difficult task that requires absolute reliability across a great variety of situations. Currently, it is almost impossible for a single sensor to handle this complexity. A single sensor has a limited perspective and intrinsic weaknesses, such as a camera’s poor adaptability to complicated lighting conditions. Researchers have therefore proposed collaborative methods to push this limit.

In commercialized autonomous driving applications, multiple sensors on the same vehicle already collaborate. Collaboration among local sensors is much easier than inter-vehicle collaboration. The sensors’ locations are known precisely, and the wired data link to the processor has high throughput and low latency. Here, we do not discuss collaboration among sensors of different types, such as RADAR (ranging) and cameras (imaging). Cooperation among sensors of similar types usually serves to extend the field of view.

For inter-vehicle cooperation, parties in the network can share raw data, features, or detection results, in order of decreasing data size. Raw data is the output of the sensors, such as images and point clouds. Detection results are labels of objects. Cooperative perception does not assume that the majority of cars in traffic are intelligent or reachable. Sharing across vehicles aims in particular at mitigating occlusion. Feature sharing is arguably as effective as raw data sharing, at a lower computation and communication cost [1]. However, compatibility is a major issue: cars participating in feature sharing must agree strictly on how features are extracted. Besides the computation and communication requirements, another challenge for inter-vehicle collaboration is localization. Regardless of what is shared, the shared data must be transformed into a global coordinate system, which makes accurate relative positioning a necessity.
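The following minimal sketch makes the transformation step concrete. It covers the planar case only and assumes that each vehicle knows its global position (x, y) in meters and its heading (yaw) in radians. The function names `local_to_global` and `global_to_local` and the pose layout are hypothetical. A full treatment would use all three rotation angles.

```python
import math

def local_to_global(points, pose):
    """Map (x, y) points from a vehicle's local frame into the global frame.

    pose = (tx, ty, yaw): the vehicle's global position in meters and its
    heading in radians, measured counter-clockwise from the global x axis.
    (Hypothetical pose layout, for illustration only.)
    """
    tx, ty, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate by the heading, then translate by the vehicle position.
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def global_to_local(points, pose):
    """Inverse mapping: global frame back into a vehicle's local frame."""
    tx, ty, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Undo the translation, then apply the transposed (inverse) rotation.
    return [(c * (gx - tx) + s * (gy - ty), -s * (gx - tx) + c * (gy - ty))
            for gx, gy in points]

# Sender at (100, 50) heading along +y; it sees a point 10 m straight ahead.
sender_pose = (100.0, 50.0, math.pi / 2)
shared = local_to_global([(10.0, 0.0)], sender_pose)
# Ego at (100, 0), also heading along +y, re-expresses the shared point.
ego_pose = (100.0, 0.0, math.pi / 2)
in_ego = global_to_local(shared, ego_pose)
```

The sender is 50 m further along +y than the ego vehicle, and the point lies 10 m ahead of the sender. Re-expressing it in the ego frame therefore places it about 60 m straight ahead of the ego vehicle.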

Communications

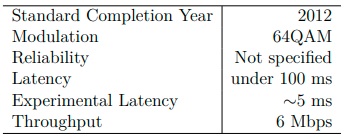

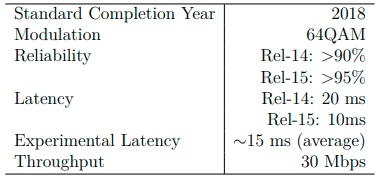

The throughput and latency requirements of multi-vehicle cooperation must not be too far from the capacity of foreseeable technologies. Two technologies, with their corresponding communication standards, are currently available for intelligent transportation systems. The first is Dedicated Short-Range Communications (DSRC), based on IEEE 802.11p. The second is Long Term Evolution (LTE) C-V2X, based on 3GPP (3rd Generation Partnership Project) Rel-14/Rel-15. The IEEE-based DSRC is similar to Wi-Fi, while the 3GPP-based C-V2X uses the cellular network. The key features of DSRC and LTE C-V2X are listed in Table 1 and Table 2.

Table 1: Key features of DSRC and IEEE 802.11p

Table 2: Key features of LTE C-V2X and 3GPP Rel-14/Rel-15

In the DSRC protocol, the receiver does not send an acknowledgement frame back to the transmitter, so DSRC is better suited to broadcasting. DSRC supports ultra-low latency, but its data rate is low. An example of a basic DSRC safety application is a braking car that broadcasts its decision to brake to alert the surrounding cars.

The 3GPP-based C-V2X is designed very differently. DSRC is ahead of LTE C-V2X for safety applications because of its low latency, but the high data rate of LTE C-V2X opens up more possibilities. The quality of service (QoS) requirements are listed in Table 4.3. The raw data sharing approach taken in this thesis falls into the task group of extended sensors. A large gap remains between current capabilities and the requirements of these advanced use cases.
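A rough calculation shows why raw point cloud sharing strains these links. The sketch below assumes a hypothetical LIDAR that produces 100,000 points per sweep at 10 sweeps per second, with 16 bytes per point (x, y, z, and intensity as 32-bit floats). It also assumes no compression and no protocol overhead. The 27 Mbit/s figure is the highest data rate defined for IEEE 802.11p on a 10 MHz channel. The function names are illustrative.

```python
def raw_bitrate_mbps(points_per_frame, bytes_per_point, frames_per_s):
    """Bit rate (Mbit/s) needed to stream uncompressed point clouds."""
    bits_per_frame = points_per_frame * bytes_per_point * 8
    return bits_per_frame * frames_per_s / 1e6

def frame_airtime_ms(points_per_frame, bytes_per_point, link_mbps):
    """Time (ms) to push one uncompressed frame through a link, ignoring overhead."""
    bits_per_frame = points_per_frame * bytes_per_point * 8
    return bits_per_frame / (link_mbps * 1e6) * 1e3

# Hypothetical LIDAR: 100,000 points/sweep, 16 bytes/point, 10 sweeps/s.
needed = raw_bitrate_mbps(100_000, 16, 10)   # 128.0 Mbit/s
# 27 Mbit/s: highest IEEE 802.11p rate on a 10 MHz channel.
airtime = frame_airtime_ms(100_000, 16, 27)  # about 474 ms per frame
```

Under these assumptions, streaming the sweeps needs about 128 Mbit/s. A single uncompressed frame occupies an 802.11p link for close to half a second. Compression or downsampling would narrow this gap, but not necessarily close it.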

The next generation of C-V2X, New Radio (NR) C-V2X based on the 3GPP Rel-16/Rel-17 standards, is expected to allow high-volume burst transmission and latency below 10 ms [2] with the help of 5G.

Localization

Localization for an intelligent vehicle is the task of determining its own location and pose. It can be roughly split into two categories: with or without a prior map. Indoor localization usually falls into the latter category, and simultaneous localization and mapping (SLAM) algorithms have been developed for this task. For outdoor localization, the Global Navigation Satellite System (GNSS) provides a positioning service.

In this case, the map is the geographic coordinate system of longitude and latitude. The most popular localization technology for driving navigation, the Global Positioning System (GPS), is one type of GNSS. We refer to positioning in the geographic coordinate system as absolute positioning.
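Absolute positions must be converted into metric offsets before they can be used for fusion. The minimal sketch below makes two assumptions. It treats the Earth as a sphere with a mean radius of 6,371 km, and it uses the equirectangular approximation, which is adequate only over the short distances between nearby vehicles. `enu_offset` is a hypothetical helper. Production code would typically rely on a geodetic library using the WGS 84 ellipsoid.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption

def enu_offset(lat_ref, lon_ref, lat, lon):
    """Approximate (east, north) offset in meters of (lat, lon) from a
    reference point, both given in decimal degrees.

    Equirectangular approximation: fine for the short baselines between
    nearby vehicles, increasingly wrong over long distances or near the poles.
    """
    mean_lat = math.radians((lat + lat_ref) / 2.0)
    east = EARTH_RADIUS_M * math.radians(lon - lon_ref) * math.cos(mean_lat)
    north = EARTH_RADIUS_M * math.radians(lat - lat_ref)
    return east, north

# Offset of vehicle B relative to vehicle A (illustrative coordinates).
dx, dy = enu_offset(60.0, 10.0, 60.0, 10.001)
```

At 60° latitude, a longitude difference of 0.001° corresponds to roughly 55.6 m east. The same difference in latitude corresponds to about 111.2 m north anywhere on the sphere.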

For the purpose of raw sensor data fusion, our goal is to obtain an accurate transformation from one sensor’s perspective to another. Since we work within a collaborative framework for object detection, we also allow cooperation in the relative positioning problem.

One trivial solution is to let the ego vehicle use its onboard ranging sensors, such as RADAR, LIDAR, or a depth camera, to measure the distance to another vehicle directly. This solution has two drawbacks. First, the target vehicles must be identified before they can cooperate, but DSRC is designed to prevent identification in order to protect privacy. Second, when relying on ranging sensors, the ego vehicle can only collaborate with unblocked vehicles within the sensors’ field of view. This greatly undermines the benefits of cooperative perception. On the positive side, ranging sensors can easily position other vehicles with very high accuracy.

Another group of solutions is based on GNSS. The baseline is for each car to obtain its absolute position and then compute the differences. This approach is not viable, because the considerable errors in the GNSS measurements propagate into the differences. A method named differential GNSS can improve GNSS accuracy by estimating and correcting the offsets in the measurements using other receivers at known locations. The errors of vehicles close to each other are correlated, so the relative positioning error can also be reduced with statistical methods, although this requires significant processing time. When vehicles simply exchange their GNSS measurements, the standard deviation is around 3.5 meters, and further processing can reduce it to around 1 meter [3]. Standard makers have recognized the challenge of localization. It is claimed that the future NR C-V2X will support sub-meter positioning by communicating with road-side infrastructure.
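The variance of a difference shows why correlated errors help. Suppose the two receivers’ errors along one axis have standard deviations σ_a and σ_b and correlation coefficient ρ. The relative position error then has variance σ_a² + σ_b² - 2ρσ_aσ_b. The sketch below evaluates this expression. The function name and the 3 m per-receiver error are illustrative assumptions and are not taken from [3].

```python
import math

def relative_error_std(sigma_a, sigma_b, rho):
    """Std. dev. (m) of the difference between two receivers' position errors
    along one axis, given their std. devs. and correlation coefficient rho.

    Var(e_a - e_b) = sigma_a**2 + sigma_b**2 - 2 * rho * sigma_a * sigma_b
    """
    var = sigma_a ** 2 + sigma_b ** 2 - 2.0 * rho * sigma_a * sigma_b
    return math.sqrt(max(var, 0.0))  # clamp tiny negative rounding errors

# Illustrative values: 3 m error per receiver.
independent = relative_error_std(3.0, 3.0, 0.0)  # ~4.24 m, worse than either fix
correlated = relative_error_std(3.0, 3.0, 0.8)   # ~1.90 m, shared errors cancel
```

With independent errors, differencing two fixes makes the relative error larger than either absolute error. The common-mode part cancels only when the errors are strongly correlated.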

Besides the location, the direction the car is facing is also critical. The difference in locations determines the translation, and the difference in directions determines the rotation. All three angles (pitch, yaw, and roll) should be available, and modern digital 3D compasses can provide very reliable measurements of them. Synchronization is also required to prevent misalignment in time. GPS is one of the sensors that can provide it, and the 3GPP-based C-V2X protocols also support synchronization [4].
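The translation and rotation can be combined into one rigid transformation. The sketch below makes two assumptions. It uses the yaw-pitch-roll (Z-Y-X) rotation convention, and it expresses both vehicles’ poses in a shared world frame as ((x, y, z), (yaw, pitch, roll)). Other conventions change the matrix. The helper names are hypothetical. The code maps points from the sender’s sensor frame into the ego sensor frame.

```python
import math

def rotation_zyx(yaw, pitch, roll):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists; angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def sender_to_ego(points, sender_pose, ego_pose):
    """Map 3D points from the sender's sensor frame into the ego sensor frame.

    Each pose is ((x, y, z), (yaw, pitch, roll)) in a shared world frame:
    p_world = R_s @ p + t_s, then p_ego = R_e^T @ (p_world - t_e).
    """
    t_s, angles_s = sender_pose
    t_e, angles_e = ego_pose
    r_s = rotation_zyx(*angles_s)
    # The inverse of a rotation matrix is its transpose.
    r_e_inv = [list(col) for col in zip(*rotation_zyx(*angles_e))]
    out = []
    for p in points:
        world = [a + b for a, b in zip(mat_vec(r_s, p), t_s)]
        out.append(mat_vec(r_e_inv, [a - b for a, b in zip(world, t_e)]))
    return out

# Two cars facing each other: the sender is 20 m ahead, turned 180 degrees.
ego = ((0.0, 0.0, 0.0), (0.0, 0.0, 0.0))
sender = ((20.0, 0.0, 0.0), (math.pi, 0.0, 0.0))
fused = sender_to_ego([(5.0, 0.0, 0.0)], sender, ego)  # about (15, 0, 0)
```

A point 5 m in front of the oncoming sender lands about 15 m ahead of the ego vehicle. Any error in the measured angles or positions enters this transform directly.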

Scenarios of Interest

Cooperative perception requires a considerable amount of data sharing, while computation and communication resources are very limited, as discussed in the previous section. Therefore, cooperation should only be active under specific circumstances for optimal efficiency. Several scenarios, each representing an interesting traffic situation, are listed in this section to further clarify the problem.

Relative Positions

The relative positions of collaborating vehicles determine the patterns of overlap. The LIDAR point clouds used in this thesis have no perspective distortion and can be easily fused. Fusion with 2D images would impose more constraints. In this work, we use four setups to represent possible traffic situations in our problem formulation. The first setup considered here is tailing, in which one car follows another, as shown in Figure 1. In this setup, the car in front gains very little, because the view of the car behind is mostly blocked. The car in the back, in contrast, gains a much more extended field of view and can react to traffic more promptly. In this case, sharing is helpful but not very efficient, especially when there is a long line of vehicles.

A more interesting scenario is two cars driving towards each other, as shown in Figure 2. In this case, the fields of view of the two cars overlap heavily. The traffic between them may be complicated, with other cars blocking each other. Fusing the views from two opposite sides then gives the participating vehicles a better grasp of the scene. The distance between the two cars can be varied, so that the amount of overlap can be increased or decreased to study its effect on object detection performance.
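An idealized model illustrates how the distance tunes the overlap. Consider two 360-degree sensors with the same range r whose centers are d apart, ignoring occlusion and the shape of real fields of view. The shared area is the lens formed by two circles, 2r²·arccos(d/2r) - (d/2)·√(4r² - d²). The 100 m sensor range in the usage lines and the helper names are assumptions for illustration.

```python
import math

def coverage_overlap_area(radius_m, distance_m):
    """Area (m^2) shared by two circular sensing ranges of equal radius.

    Idealized: 360-degree sensors with range radius_m whose centers are
    distance_m apart, with no occlusion.
    """
    r, d = radius_m, distance_m
    if d >= 2 * r:
        return 0.0
    if d <= 0:
        return math.pi * r * r
    return 2 * r * r * math.acos(d / (2 * r)) - 0.5 * d * math.sqrt(4 * r * r - d * d)

def overlap_fraction(radius_m, distance_m):
    """Overlap area as a fraction of one sensor's coverage disc."""
    return coverage_overlap_area(radius_m, distance_m) / (math.pi * radius_m ** 2)

# Hypothetical 100 m range: overlap shrinks as the two cars move apart.
for d in (20.0, 60.0, 100.0, 150.0):
    print(f"d = {d:5.0f} m -> overlap fraction {overlap_fraction(100.0, d):.2f}")
```

The overlap falls monotonically from the full disc at d = 0 to nothing at d = 2r. In this simplified model, the distance between the cars therefore acts as a single knob for the overlap.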

Another setup is two vehicles facing perpendicular to each other, as shown in Figure 3. This setup is common at intersections. Point clouds taken from these two perspectives should contain the most information among all listed cases, because an obstacle is unlikely to block both views simultaneously. The disadvantage, however, arises when one of the cars is turning: errors in measuring its changing yaw can then cause severe misalignment in the fused point cloud.

Figure 3: Two vehicles facing perpendicular to each other
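The severity of a yaw error in the perpendicular setup grows with distance from the sensor. Rotating a point at range r by an erroneous angle Δψ about the sensor origin moves it along a chord of length 2r·sin(Δψ/2). The sketch below evaluates this for an illustrative 1° error. The helper name is hypothetical.

```python
import math

def yaw_misalignment_m(range_m, yaw_error_deg):
    """Displacement (m) of a point at range_m from the sensor caused by a yaw
    error: rotation about the sensor origin moves it along a chord of length
    2 * r * sin(error / 2)."""
    return 2.0 * range_m * math.sin(math.radians(yaw_error_deg) / 2.0)

# Illustrative: a 1-degree yaw error at increasing ranges.
for r in (10.0, 50.0, 100.0):
    print(f"{r:5.0f} m -> {yaw_misalignment_m(r, 1.0):.2f} m")
```

The displacement scales linearly with range. A 1° error moves a point at 50 m by roughly 0.87 m, and the same error moves a point at 100 m by twice that.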

The last setup is vehicles in turns. To keep the data clean, a roundabout is used, because collisions and blocking occur too often in the center of an intersection.

The yaw angle changes constantly while a vehicle is turning. In reality, location and rotation measurements are always imperfect and time delays are always present. Under these conditions, changes in yaw introduce much larger errors than straight-line motion does.
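The effect of a time delay can be estimated in a similar way. Assume the sender’s pose is stale by a delay Δt while the vehicle moves at speed v and turns at yaw rate ω. The translation error is at most vΔt. The stale heading rotates a point at range r by ωΔt, displacing it by 2r·sin(ωΔt/2). Adding the two gives an upper bound. The model, the function name, and all numbers are illustrative assumptions.

```python
import math

def delay_misalignment_bound_m(range_m, speed_mps, yaw_rate_dps, delay_s):
    """Upper bound (m) on the displacement of a point at range_m when the
    sender's pose is stale by delay_s seconds.

    Translation error is at most speed * delay (the distance driven), and the
    stale heading rotates the point by yaw_rate * delay, moving it along a
    chord of length 2 * r * sin(angle / 2). Summing both gives the bound.
    """
    translation = speed_mps * delay_s
    angle = math.radians(yaw_rate_dps * delay_s)
    rotation = 2.0 * range_m * math.sin(angle / 2.0)
    return translation + rotation

# Illustrative: 10 m/s, 50 ms delay, point 50 m away.
straight = delay_misalignment_bound_m(50.0, 10.0, 0.0, 0.05)   # 0.5 m
turning = delay_misalignment_bound_m(50.0, 10.0, 20.0, 0.05)   # about 1.37 m
```

Consider a hypothetical 50 ms delay at 10 m/s and a point 50 m away. Driving straight, the point is off by at most 0.5 m. When the car is also turning at 20°/s, the bound rises to about 1.37 m, and the rotational part keeps growing with range.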

Locations

Cooperative perception is not suitable for all road segments. To avoid wasted fusion effort, collaboration is best used when the relative speeds of the cars are low. Moreover, the additional resources should not be spent on simple lane following in congested traffic. Therefore, we choose intersections as the test locations. Specifically, three types of intersections are used. A crossroads serves as an example of a large intersection, and a T-junction represents smaller intersections. The roundabout scenario is the most complex case.
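The selection criteria above could be expressed as a simple gate that decides when to activate cooperation. The sketch below is hypothetical. The location labels, the use of planar velocity vectors in m/s, and the 5 m/s relative-speed threshold are illustrative assumptions, not values used in this work.

```python
import math

# Hypothetical labels for the location types where cooperation is enabled.
COOPERATIVE_LOCATIONS = {"crossroads", "t_junction", "roundabout"}

def should_cooperate(location, ego_velocity, other_velocity, max_rel_speed_mps=5.0):
    """Hypothetical gate: cooperate only at the chosen intersection types and
    only when the relative speed of the two vehicles is low.

    Velocities are planar (vx, vy) vectors in m/s in a common frame; the
    5 m/s default threshold is an illustrative assumption.
    """
    if location not in COOPERATIVE_LOCATIONS:
        return False
    rel_speed = math.hypot(ego_velocity[0] - other_velocity[0],
                           ego_velocity[1] - other_velocity[1])
    return rel_speed <= max_rel_speed_mps

print(should_cooperate("roundabout", (3.0, 0.0), (0.0, 3.0)))  # True: ~4.2 m/s
print(should_cooperate("crossroads", (5.0, 0.0), (0.0, 3.0)))  # False: ~5.8 m/s
print(should_cooperate("highway", (10.0, 0.0), (10.0, 0.0)))   # False: not an intersection
```

The gate uses the relative velocity vector rather than the difference in speeds. This matters because two cars crossing perpendicularly at the same speed still have a large relative speed.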

[1] Q. Chen, X. Ma, S. Tang, J. Guo, Q. Yang, and S. Fu, “F-Cooper: Feature based cooperative perception for autonomous vehicle edge computing system using 3D point clouds,” in Proceedings of the 4th ACM/IEEE Symposium on Edge Computing, ser. SEC ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 88–100. [Online]. Available: https://doi.org/10.1145/3318216.3363300

[2] “5G Americas white paper: Cellular V2X communications towards 5G,” 5G Americas, Tech. Rep., March 2018.

[3] F. de Ponte Müller, “Survey on ranging sensors and cooperative techniques for relative positioning of vehicles,” Sensors (Basel, Switzerland), vol. 17, no. 2, January 2017. [Online]. Available: https://europepmc.org/articles/PMC5335929

[4] S. Chen, J. Hu, Y. Shi, L. Zhao, and W. Li, “A vision of C-V2X: Technologies, field testing, and challenges with Chinese development,” IEEE Internet of Things Journal, vol. 7, no. 5, pp. 3872–3881, 2020.